Ceph multi-node cluster

21. Ceph multi-node cluster

21.1. Start with a 3-node cluster

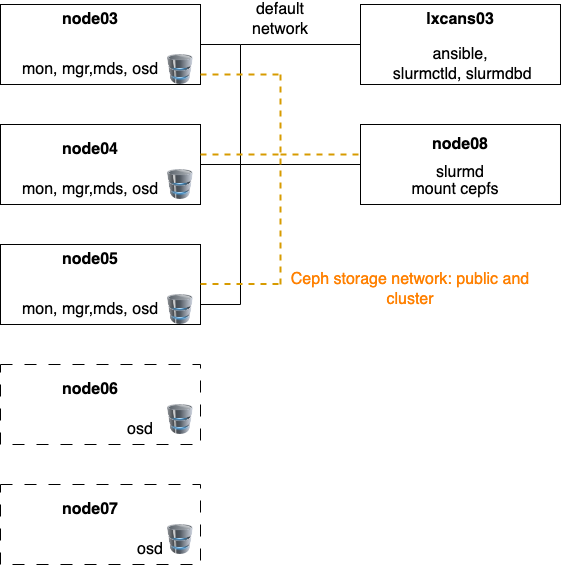

We start with a 3-node Ceph cluster: node03, node04, and node05 will each run the Ceph monitor, manager, metadata, and OSD daemons. Later, we may add node06 and node07 as metadata nodes.

Basic node communications, including SSH and SLURM, will run on the original network, 192.168.5.0/24.

The Ceph public and cluster networks will both run on the InfiniBand network, 192.168.7.0/24.
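As a rough illustration of the network split, the sketch below renders a `[global]` fragment for `ceph.conf` that points both Ceph networks at the InfiniBand subnet, and checks whether an address belongs to that subnet. `public_network` and `cluster_network` are Ceph option names; the two subnets come from the text, while the helper names and the host addresses in the demo are hypothetical. How the options are actually applied depends on the deployment tool in use.

```python
import configparser
import io
import ipaddress

# Subnets taken from the text above.
MANAGEMENT_NET = ipaddress.ip_network("192.168.5.0/24")  # SSH, SLURM
CEPH_NET = ipaddress.ip_network("192.168.7.0/24")        # Ceph public + cluster


def render_global_section(public_net, cluster_net):
    """Return a ceph.conf [global] fragment naming the two Ceph networks."""
    cfg = configparser.ConfigParser()
    cfg["global"] = {
        "public_network": str(public_net),
        "cluster_network": str(cluster_net),
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


def on_ceph_network(address):
    """True if the address lies inside the InfiniBand (Ceph) subnet."""
    return ipaddress.ip_address(address) in CEPH_NET


if __name__ == "__main__":
    print(render_global_section(CEPH_NET, CEPH_NET))
    # Hypothetical host addresses, used only to exercise the check.
    for addr in ("192.168.7.3", "192.168.5.3"):
        print(addr, "on Ceph network:", on_ceph_network(addr))
```

Using the same subnet for both options matches the plan stated here; a separate cluster network would only require passing a different value as the second argument.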

CephFS will be mounted on node08. The benchmarking applications, including fio, will run on node08.

The LXC container, lxcans03, will be used as the Ansible manager and SLURM manager.

21.2. Benchmarks to run

Create replication and erasure pools.
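When comparing the two pool types, it may help to keep their raw-capacity cost in mind. The sketch below computes the usable fraction of raw storage for a replicated pool and for an erasure-coded pool. The replica count of 3 and the k=2, m=1 profile are illustrative assumptions, not settings stated here; with three hosts and a per-host failure domain, k + m cannot exceed 3.

```python
def replicated_efficiency(size):
    """Usable fraction of raw capacity for a replicated pool with `size` copies."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return 1 / size


def erasure_efficiency(k, m):
    """Usable fraction of raw capacity for an erasure-coded pool.

    k is the number of data chunks, m the number of coding chunks.
    """
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    return k / (k + m)


if __name__ == "__main__":
    # Assumed example values: 3 replicas versus a k=2, m=1 profile.
    print(f"replicated, size=3: {replicated_efficiency(3):.3f}")
    print(f"erasure, k=2 m=1:   {erasure_efficiency(2, 1):.3f}")
```

Under these assumed settings the erasure pool stores twice as much user data per raw byte as the replicated pool, which is one reason to benchmark both.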

Run fio on the mounted file system on node08.
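A minimal sketch of how an fio run could be assembled from Python is shown below. The mount point `/mnt/cephfs`, the job name, and every workload parameter (block size, file size, job count, runtime) are assumptions chosen for illustration; only the fio option names themselves are real. The script prints the command rather than executing it.

```python
import shlex


def fio_command(directory, rw="randwrite", block_size="4k", size="1G",
                numjobs=4, runtime_s=60):
    """Build an fio argument list for a time-based job in `directory`."""
    return [
        "fio",
        "--name=cephfs-bench",        # hypothetical job name
        f"--directory={directory}",   # where fio creates its test files
        f"--rw={rw}",                 # I/O pattern, e.g. read, write, randrw
        f"--bs={block_size}",
        f"--size={size}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",               # keep running until the runtime elapses
        "--ioengine=libaio",
        "--direct=1",                 # bypass the page cache
        "--group_reporting",          # aggregate statistics across jobs
        "--output-format=json",
    ]


if __name__ == "__main__":
    # /mnt/cephfs is an assumed mount point on node08.
    print(shlex.join(fio_command("/mnt/cephfs")))
```

Running the same job against a directory backed by the replicated pool and one backed by the erasure pool would give directly comparable numbers.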

21.3. Disaster recovery modelling

While running applications with I/O on the mounted CephFS, fail an OSD and then one of the nodes.

See how the mounted file system behaves in terms of availability and performance.
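One simple way to observe availability during a failure is to time a small synchronous write at a fixed interval and log the result. The sketch below is a hypothetical probe, not part of the planned tooling; the probe file name, payload size, and interval are assumptions. Note that a stalled mount may block the write rather than raise an error, in which case the outage shows up as one very long latency sample instead of a `None`.

```python
import os
import tempfile
import time


def probe_write(directory, payload=b"x" * 4096):
    """Write and fsync one small file; return elapsed seconds, or None on I/O error."""
    path = os.path.join(directory, ".probe")  # hypothetical probe file name
    start = time.monotonic()
    try:
        with open(path, "wb") as f:
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # force the data out to the file system
    except OSError:
        return None
    return time.monotonic() - start


def run_probe(directory, count, interval_s=1.0):
    """Collect `count` samples as (wall-clock timestamp, latency or None)."""
    samples = []
    for _ in range(count):
        samples.append((time.time(), probe_write(directory)))
        time.sleep(interval_s)
    return samples


if __name__ == "__main__":
    # A temporary directory stands in for the CephFS mount point here.
    with tempfile.TemporaryDirectory() as d:
        for stamp, latency in run_probe(d, count=3, interval_s=0.1):
            print(stamp, latency)
```

Pointing `run_probe` at the real mount while an OSD or node is taken down would give a timeline of write latency to set beside the fio results.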