Adding cluster network to your Ceph nodes

18. Adding cluster network to your Ceph nodes

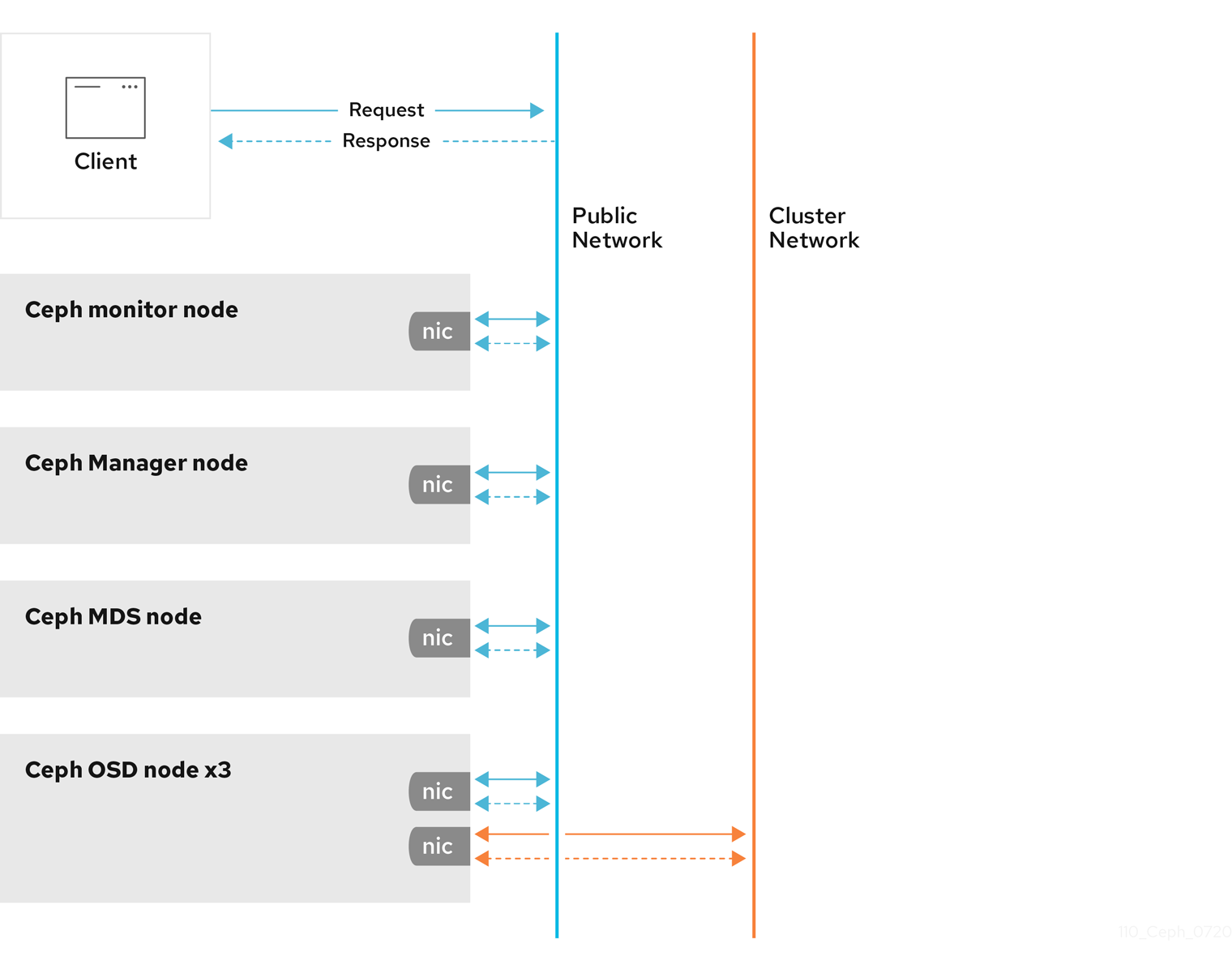

The cluster network carries the traffic between OSDs on different nodes, such as replication, recovery, and the rebalancing of placement groups, which keeps that load off the public network.

18.1. Enable the second network interface on the nodes.

We need to add the configuration for eno2 to the file /etc/network/interfaces.

This is easier to do with Ansible. Run the steps below in your lxsans container.

Add a new host group into your inventory file, hosts.ini, for example:

hosts.ini

[nic_nodes]

node10

node11

These are the nodes on which the new playbook, second_nic.yml, will run the NIC configuration tasks:

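---
- name: enable 2nd network interface
  hosts: nic_nodes
  # Assumption: privilege escalation is not already enabled in ansible.cfg.
  become: true
  tasks:
    - name: add eno2 to /etc/network/interfaces
      ansible.builtin.blockinfile:
        path: /etc/network/interfaces
        block: |
          auto eno2
          iface eno2 inet dhcp

    - name: bring up eno2
      ansible.builtin.command: ifup eno2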

Make sure you can ssh to both nodes and run sudo without a password. Otherwise, set up passwordless ssh and sudo as in #15.
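One way to check both conditions before running the playbook is sketched below. The function name check_access and the SSH_CMD override are made up for this example; the sketch only assumes that ssh and sudo behave as on a stock Debian or Ubuntu node.

```shell
# Report whether each host accepts key-based ssh and passwordless sudo.
# SSH_CMD is a hypothetical override for dry runs (for example SSH_CMD=true).
check_access() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        # sudo -n fails instead of prompting when a password would be needed.
        if ${SSH_CMD:-ssh} -o BatchMode=yes "$host" sudo -n true </dev/null; then
            echo "ok   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}
```

Calling check_access node10 node11 prints one line per node and returns non-zero if any node fails either check.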

Run the playbook:

ansible-playbook second_nic.yml

Check that you can ping the nodes by their second hostnames (the names that resolve to the eno2 addresses), for example:

ping -c3 node10s

ping -c3 node11s

If both respond, the nodes are ready for the cluster network to be added to the Ceph configuration.

18.2. Add the cluster network in the configuration.

In /etc/ceph/ceph.conf on both nodes, add the line below after the public_network line:

cluster_network = 192.168.6.0/24
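Before restarting anything, it can be worth confirming that each node's eno2 address really falls inside this subnet. The sketch below does the subnet arithmetic in plain shell; the function names ip_to_int and in_cidr are made up for this example, and 192.168.6.10 is the address this chapter later reports for node10s.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local o1 o2 o3 o4
    # Split the address on dots; the here-document keeps this portable.
    IFS=. read -r o1 o2 o3 o4 <<EOF
$1
EOF
    echo $(( ($o1 << 24) | ($o2 << 16) | ($o3 << 8) | $o4 ))
}

# Return 0 if address $1 lies inside CIDR block $2 (e.g. 192.168.6.0/24).
in_cidr() {
    local addr net bits mask
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # Build the netmask from the prefix length, limited to 32 bits.
    mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}
```

For example, in_cidr 192.168.6.10 192.168.6.0/24 succeeds, while in_cidr 192.168.1.10 192.168.6.0/24 fails. On a node, the first argument could be taken from the output of ip -4 -o addr show dev eno2.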

Restart the OSD daemons on both nodes.

For example, on the first node:

sudo systemctl restart ceph-osd@0.service

sudo systemctl restart ceph-osd@1.service

sudo systemctl restart ceph-osd@2.service

On the second node:

sudo systemctl restart ceph-osd@3.service

sudo systemctl restart ceph-osd@4.service

sudo systemctl restart ceph-osd@5.service
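The same restarts can be written as a loop over the OSD ids. This is only a sketch: osd_units, restart_osds and the RUNNER override are names invented for this example, and the ids are the ones used above.

```shell
# Print the systemd unit name for each OSD id given as an argument.
osd_units() {
    local id
    for id in "$@"; do
        printf 'ceph-osd@%s.service\n' "$id"
    done
}

# Restart the given OSDs one at a time, stopping at the first failure.
# RUNNER is a hypothetical override; RUNNER=echo prints instead of running.
restart_osds() {
    local unit
    for unit in $(osd_units "$@"); do
        ${RUNNER:-sudo} systemctl restart "$unit" || return 1
    done
}
```

On the first node this would be restart_osds 0 1 2, and on the second node restart_osds 3 4 5. Running RUNNER=echo restart_osds 0 1 2 first shows the commands without executing them.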

Check the cluster status:

ceph -s

18.3. Check OSD communication between the nodes.

On one of the nodes, for example node11, run lsof to list the TCP connections to and from the other node's storage IP address.

For example, for node10s:

lsof -i TCP@node10s

It shows the following:

lsof output:

COMMAND    PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
ceph-osd 35026 ceph  73u   IPv4  44286      0t0  TCP node11s:6802->node10s:53906 (ESTABLISHED)
ceph-osd 35026 ceph  74u   IPv4  44289      0t0  TCP node11s:57842->node10s:6802 (ESTABLISHED)
ceph-osd 35026 ceph  76u   IPv4  38349      0t0  TCP node11s:33274->node10s:6800 (ESTABLISHED)
ceph-osd 35463 ceph  73u   IPv4  83319      0t0  TCP node11s:6806->node10s:47796 (ESTABLISHED)
ceph-osd 35463 ceph  74u   IPv4  83322      0t0  TCP node11s:57832->node10s:6802 (ESTABLISHED)
ceph-osd 35463 ceph  76u   IPv4  81719      0t0  TCP node11s:33270->node10s:6800 (ESTABLISHED)
ceph-osd 35936 ceph  72u   IPv4  79181      0t0  TCP node11s:6810->node10s:55978 (ESTABLISHED)
ceph-osd 35936 ceph  74u   IPv4  79187      0t0  TCP node11s:33266->node10s:6800 (ESTABLISHED)
ceph-osd 35936 ceph  75u   IPv4  18399      0t0  TCP node11s:57828->node10s:6802 (ESTABLISHED)

Note that node10s has an IP address on the cluster network, 192.168.6.10.
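To get a quick summary instead of reading every row, the lsof output can be piped through a small filter that counts established connections per ceph-osd process. This is a sketch, with count_osd_conns being a name invented for this example; it relies only on the column layout shown above.

```shell
# Count ESTABLISHED connections per ceph-osd PID from lsof output on stdin.
# Prints one "PID COUNT" line per process, sorted by PID.
count_osd_conns() {
    awk '$1 == "ceph-osd" && $NF == "(ESTABLISHED)" { n[$2]++ }
         END { for (pid in n) print pid, n[pid] }' | sort -n
}
```

Usage would be lsof -i TCP@node10s | count_osd_conns. With the output above, each of the three ceph-osd processes on node11 has three connections with node10s.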

Let’s find out which application uses one of these ports on node10s.

On node10, install the net-tools package:

sudo apt install net-tools

For example, check which application owns port tcp/55978 on node10:

sudo netstat -nalp | grep 55978

It shows ceph-osd:

netstat output:

tcp 0 0 192.168.6.10:55978 192.168.6.11:6810 ESTABLISHED 7869/ceph-osd

Both endpoints are in 192.168.6.0/24, so the network communication between the OSDs goes over the cluster network.