Network File System (NFS)


6. Network File System (NFS)

6.1. Outline of the NFS topic

Network File System (NFS) sharing.

Services involved in NFS

File system exporting and mounting

NFS server setup on the node

NFS client setup on the container

File access permissions on a server

Automount

6.2. File system sharing over network

NFS defines a method of sharing files in which files residing on one or more remote servers can be accessed on a local client system in a manner that makes them appear as local files and directories.

6.3. NFS versions

NFS was originally developed by Sun Microsystems:

NFSv2 released in 1985 (no longer supported on Ubuntu and Red Hat)

NFSv3 released in 1995

NFSv4 released in 2003 (developed by the Internet Engineering Task Force, IETF)

NFSv4.1 released in 2010

NFSv4.2 released in 2016

Detailed information about the NFS versions for Linux is available on the Wiki.

NFS support must be enabled in the Linux kernel. Check the kernel config file for ‘CONFIG_NFS’:

grep CONFIG_NFS /boot/config-*

output

CONFIG_NFS_V2=m

CONFIG_NFS_V3=m

CONFIG_NFS_V3_ACL=y

CONFIG_NFS_V4=m

CONFIG_NFS_V4_1=y

CONFIG_NFS_V4_2=y

CONFIG_NFS_V4_1_IMPLEMENTATION_ID_DOMAIN="kernel.org"

CONFIG_NFS_V4_1_MIGRATION=y

CONFIG_NFS_V4_SECURITY_LABEL=y
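The grep output above can also be checked programmatically. The Python sketch below is only an illustration (the function name nfs_kernel_support is hypothetical). It classifies each CONFIG_NFS_* option: =y means the feature is built into the kernel, and =m means it is built as a loadable module. Lines such as "# CONFIG_NFS_V2 is not set" denote disabled options and are skipped.

```python
import re

# "CONFIG_NFS_<NAME>=<value>" anywhere on the line, so the "/boot/config-...:"
# prefix that grep adds when several config files match is tolerated.
OPTION = re.compile(r"(CONFIG_NFS_\w+)=(.*)$")


def nfs_kernel_support(config_text: str) -> dict:
    """Map each CONFIG_NFS_* option to 'built-in', 'module', or its literal value."""
    support = {}
    for line in config_text.splitlines():
        match = OPTION.search(line.strip())
        if match is None:
            continue  # blank lines and "# CONFIG_NFS_... is not set" comments
        name, value = match.groups()
        if value == "y":
            support[name] = "built-in"  # compiled into the kernel image
        elif value == "m":
            support[name] = "module"    # built as a loadable kernel module
        else:
            support[name] = value.strip('"')  # string options, e.g. a domain
    return support


sample = """CONFIG_NFS_V3=m
CONFIG_NFS_V4_1=y
CONFIG_NFS_V4_1_IMPLEMENTATION_ID_DOMAIN="kernel.org"
"""
for option, state in nfs_kernel_support(sample).items():
    print(option, state)
```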

6.4. File system virtualization

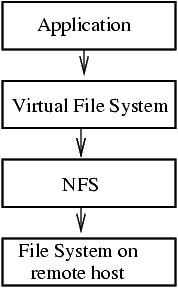

The Virtual File System (VFS) is an abstraction layer provided by the kernel. It presents every file system to applications as if it were a local one.

It is platform independent.

It preserves or emulates Unix file system semantics.

6.5. Example of physical file locations

In a Python script, we may refer to files by their full paths:

f1 = open("/home/hostadm/python/parameters.txt","w")

f2 = open("/NFS/python/parameters.txt","w")

Files f1 and f2 can physically reside anywhere: on the local file system or on NFS.

The application doesn’t care. It is the task of the VFS to provide access to the files by their full path.
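As a conceptual model, the file system that serves a path is the one mounted at the longest mount point that is a prefix of that path. The sketch below mimics this lookup over text in the /proc/self/mounts format. find_mount is a hypothetical helper, not a standard API. On a real Linux client you could pass it the contents of /proc/self/mounts.

```python
import os

def find_mount(path: str, mounts_text: str) -> tuple:
    """Return (source, mount_point, fstype) of the mount that holds `path`.

    `mounts_text` follows the /proc/self/mounts format, one mount per line:
    "source mount_point fstype options dump pass". Octal escapes used for
    spaces in mount points are not decoded in this sketch.
    """
    path = os.path.normpath(path)
    best = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        source, mount_point, fstype = fields[:3]
        prefix = mount_point.rstrip("/") + "/"  # "/" stays "/"
        if path == mount_point or path.startswith(prefix):
            # Longest mount point wins; ">=" lets a later mount stacked on the
            # same directory hide an earlier one.
            if best is None or len(mount_point) >= len(best[1]):
                best = (source, mount_point, fstype)
    if best is None:
        raise ValueError(f"no mount point contains {path}")
    return best


mounts = """/dev/sda1 / ext4 rw,relatime 0 0
node01:/tank/scratch /tank/scratch nfs4 rw,relatime 0 0"""
print(find_mount("/home/hostadm/python/parameters.txt", mounts))
print(find_mount("/tank/scratch/edward/hosts", mounts))
```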

6.6. NFS and RPC

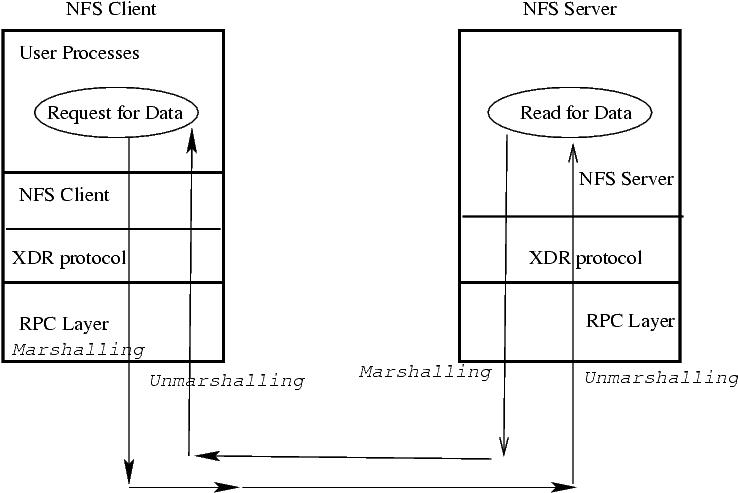

NFS uses the Remote Procedure Call (RPC) layer for server-client communication.

Marshalling: packaging the call arguments in XDR (eXternal Data Representation) format.

The XDR format is platform independent.

RPC allows applications on one host to call procedures (functions) on another, remote host.

RPC allows a server to respond to more than one version of a protocol at the same time (for example, NFSv4 and NFSv3).
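The marshalling step can be illustrated with a simplified XDR encoder that follows the encoding rules of RFC 4506. An unsigned integer is 4 bytes in big-endian order. A string is a 4-byte length followed by its bytes, zero-padded to a multiple of 4. The sketch encodes only these two types and does not build a complete RPC call message (there is no RPC header or credentials). The marshalled values are simply examples taken from this chapter.

```python
import struct

def xdr_uint(value: int) -> bytes:
    """XDR unsigned int: 4 bytes, most significant byte first (big-endian)."""
    return struct.pack(">I", value)


def xdr_string(text: str) -> bytes:
    """XDR string: 4-byte length, the ASCII bytes, zero padding to a 4-byte boundary."""
    data = text.encode("ascii")
    padding = (4 - len(data) % 4) % 4
    return xdr_uint(len(data)) + data + b"\0" * padding


def xdr_read_string(buf: bytes, offset: int = 0) -> tuple:
    """Decode an XDR string at `offset`; return (text, offset after the padding)."""
    (length,) = struct.unpack_from(">I", buf, offset)
    start = offset + 4
    text = buf[start:start + length].decode("ascii")
    return text, start + length + (4 - length % 4) % 4


# Marshal two example arguments: the NFS RPC program number and a path.
message = xdr_uint(100003) + xdr_string("/tank/scratch")
print(message.hex())

# Unmarshal them again on the "other side".
(program,) = struct.unpack_from(">I", message, 0)
path, end = xdr_read_string(message, 4)
print(program, path, end == len(message))
```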

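6.7. NFS in the 7-layer OSI and TCP/IP protocol stack

NFS, XDR and RPC fit into the top 3 layers of the OSI model: NFS at the application layer, XDR at the presentation layer, and RPC at the session layer.

XDR translates data into a canonical (platform-independent) format.

RPC provides remote procedure calls that appear to the caller as local procedure calls.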

6.8. NFS server daemons

Daemons are processes running on a server and providing some services.

Several daemons are involved in NFS.

The same server can offer NFSv4 and NFSv3 file system access. It is up to the client to decide which version to use.

| NFS version 3 server daemons | NFS version 4 server daemons |
|---|---|
| rpcbind handles RPC requests and registers ports for RPC services. | rpcbind (unnecessary in NFSv4, but good to have for diagnostics) |
| rpc.mountd handles the initial mount requests. | rpc.mountd handles the initial mount requests. |
| nfsd or [nfsd] handles data streaming. | nfsd or [nfsd] handles data streaming. |
| rpc.rquotad handles user file quotas on exported volumes. | |
| rpc.lockd handles file locking to make sure several processes don’t write into the same file. | |
| rpc.statd interacts with the rpc.lockd daemon and provides crash recovery for the locking services. | |
| | rpc.idmapd handles user and group mapping (optional). |

To verify that the services have started and registered with rpcbind, run the command

rpcinfo -p

To see rpc services on a remote host, for example with IP address 192.168.5.3:

rpcinfo -p 192.168.5.3
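Before you run rpcinfo against a remote host, it can help to check whether the rpcbind port (111) and the NFS port (2049) accept TCP connections at all, for example to spot a firewall. Both ports appear in the rpcinfo output in this chapter. A minimal Python sketch follows. tcp_port_open is a hypothetical helper. A successful connection only shows that something is listening on the port, not that the RPC service behind it works.

```python
import socket

def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name not resolved
        return False


if __name__ == "__main__":
    # Ports taken from the rpcinfo output in this chapter.
    for port, service in ((111, "rpcbind"), (2049, "nfs")):
        state = "open" if tcp_port_open("192.168.5.3", port) else "closed/filtered"
        print(f"{service:8} {port:5} {state}")
```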

6.9. NFS client daemons

| NFS version 3 client daemons | NFS version 4 client daemons |
|---|---|
| rpcbind | rpcbind (unnecessary) |
| rpc.lockd | |
| rpc.statd | |
| | rpc.idmapd (optional) |

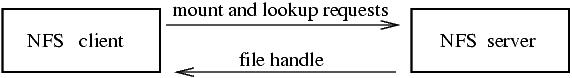

6.10. NFS mount and file handle

An NFS client receives file handles from the NFS server when it executes mount and lookup calls.

The file handles on a client refer to the file identifiers on the NFS server (inode number, disk device number, and inode generation number).

If the NFS server crashes or reboots, NFS-dependent applications on the client hang and then continue running once the server becomes available again (this is the behavior of the default hard mount).

If the file or directory that a handle refers to is removed on the server, or the exported file system is changed or corrupted, the client gets a stale file handle error.
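The relationship between a file handle and the server-side identifiers can be sketched as follows. This is purely illustrative. The field layout and the names Inode, make_handle and resolve_handle are hypothetical, and real Linux NFS file handles use a different, implementation-specific format. The sketch shows why a handle goes stale: the server can no longer match the stored inode number and generation to a live file.

```python
import errno
import struct
from dataclasses import dataclass

# Hypothetical handle layout: device (8 bytes), inode (8), generation (4).
HANDLE_FORMAT = ">QQI"


@dataclass(frozen=True)
class Inode:
    device: int
    number: int
    generation: int  # bumped when the inode number is reused for a new file


def make_handle(inode: Inode) -> bytes:
    """Server: pack the identifiers into an opaque token for the client."""
    return struct.pack(HANDLE_FORMAT, inode.device, inode.number, inode.generation)


def resolve_handle(handle: bytes, inodes: dict) -> Inode:
    """Server: map a handle back to a live inode, or fail with ESTALE."""
    device, number, generation = struct.unpack(HANDLE_FORMAT, handle)
    current = inodes.get((device, number))
    # Deleted file, or inode reused for a different file: the handle is stale.
    if current is None or current.generation != generation:
        raise OSError(errno.ESTALE, "Stale file handle")
    return current


inodes = {(8, 42): Inode(8, 42, 1)}
handle = make_handle(inodes[(8, 42)])  # the client keeps this after a lookup
del inodes[(8, 42)]                    # the directory is removed on the server
try:
    resolve_handle(handle, inodes)
except OSError as exc:
    print(exc)
```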

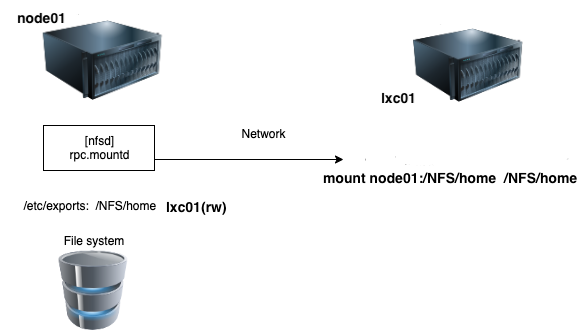

6.11. Export/mount directory over NFS

NFS server exports a directory and NFS client mounts it.

An NFS server may run several versions of NFS, for example NFSv3 and NFSv4.

The NFS client chooses the NFS version at mount time.

6.12. NFS server configuration (Exercises)

Install NFS server packages on the node server by following the instructions below.

apt install rpcbind nfs-common nfs-kernel-server

Create a directory to export:

mkdir -p /tank/scratch

Modify the file /etc/exports to share the directory with your container. Use the correct number for your container:

file content

/tank/scratch lxc01(rw)
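The export entry format is the exported path, followed by the clients, each with its options in parentheses. A small, simplified parser illustrates it. parse_exports_line is a hypothetical helper and does not cover every rule in exports(5).

```python
def parse_exports_line(line: str) -> tuple:
    """Split an /etc/exports entry into (path, {client: [options]}).

    Simplified: ignores quoted paths and the "client (options)" form with a
    space before "(", which exports(5) treats differently.
    """
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    path, *clients = line.split()
    access = {}
    for client in clients:
        host, _, options = client.partition("(")
        # "lxc01(rw,sync)" -> "lxc01", ["rw", "sync"]; no "(...)" -> defaults, []
        access[host] = [opt for opt in options.rstrip(")").split(",") if opt]
    return path, access


print(parse_exports_line("/tank/scratch lxc01(rw)"))
```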

Make sure host lxc01 is reachable from the node:

ping -c 2 lxc01

Restart the NFS server service:

systemctl restart nfs-kernel-server

Make sure the services are running by executing the command rpcinfo:

rpcinfo -p

You should see the following:

output

program vers proto port service

100000 4 tcp 111 portmapper

100000 3 tcp 111 portmapper

100000 2 tcp 111 portmapper

100000 4 udp 111 portmapper

100000 3 udp 111 portmapper

100000 2 udp 111 portmapper

100024 1 udp 41147 status

100024 1 tcp 50021 status

100005 1 udp 45022 mountd

100005 1 tcp 47677 mountd

100005 2 udp 43482 mountd

100005 2 tcp 60947 mountd

100005 3 udp 50653 mountd

100005 3 tcp 36245 mountd

100003 3 tcp 2049 nfs

100003 4 tcp 2049 nfs

100227 3 tcp 2049

100003 3 udp 2049 nfs

100227 3 udp 2049

100021 1 udp 40359 nlockmgr

100021 3 udp 40359 nlockmgr

100021 4 udp 40359 nlockmgr

100021 1 tcp 37625 nlockmgr

100021 3 tcp 37625 nlockmgr

100021 4 tcp 37625 nlockmgr
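When checking the rpcinfo output by eye gets tedious, the rows can be grouped by service. The sketch below assumes the column layout shown above: program, version, protocol, port, and an optional service name. rpc_services is a hypothetical helper. Rows without a name, such as program 100227, are keyed by their program number.

```python
from collections import defaultdict

def rpc_services(rpcinfo_output: str) -> dict:
    """Group `rpcinfo -p` rows as {service: {(version, protocol), ...}}."""
    services = defaultdict(set)
    for line in rpcinfo_output.splitlines():
        fields = line.split()
        # Data rows start with a numeric program number; skip header and blanks.
        if len(fields) < 4 or not fields[0].isdigit():
            continue
        program, version, protocol = fields[0], int(fields[1]), fields[2]
        # Rows such as program 100227 may lack a name; key them by number.
        name = fields[4] if len(fields) > 4 else program
        services[name].add((version, protocol))
    return dict(services)


output = """program vers proto port service
100000 4 tcp 111 portmapper
100005 3 tcp 36245 mountd
100003 4 tcp 2049 nfs
100227 3 tcp 2049
"""
services = rpc_services(output)
for required in ("portmapper", "mountd", "nfs"):
    print(required, "OK" if required in services else "MISSING")
print("NFSv4 over TCP:", (4, "tcp") in services.get("nfs", set()))
```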

Run the command below to see what the NFS server exports and to which clients:

showmount -e

6.13. NFS client configuration (Exercises)

Install the NFS related packages with apt on the LXC container, lxc01 (the NFS client):

apt install nfs-common

Run rpcinfo against the NFS server. Use your node number instead of 01:

rpcinfo -p node01

If you see the same output as on the NFS server, it means the server allows you to access the rpcbind and the rpc services. Check what directories are exported to you from the server:

/sbin/showmount -e node01

It should show:

output

/tank/scratch lxc01

Now you are ready to mount the exported directory on lxc01.

6.15. NFS mount on a client (Exercises)

Create a new mount point and mount the exported directory onto it via NFS:

mkdir -p /tank/scratch

mount node01:/tank/scratch /tank/scratch

To make sure the directory has been mounted, run the command

mount

Also run

df -h

The mounted directory shows up at the bottom of the file system list:

node01:/tank/scratch 3.9G 1.5G 2.3G 39% /tank/scratch
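The same check can be scripted. In this sketch, os.path.ismount tells whether the path is a mount point, and os.statvfs returns the block counts from which df derives Size, Used and Avail. mount_usage is a hypothetical helper. On the client, calling it on /tank/scratch reports the exported file system on node01.

```python
import os

def mount_usage(path: str) -> dict:
    """Whether `path` is a mount point, plus size/used/avail in bytes (like df)."""
    st = os.statvfs(path)
    block = st.f_frsize  # fundamental block size the counts are expressed in
    return {
        "is_mount": os.path.ismount(path),
        "size": st.f_blocks * block,
        "used": (st.f_blocks - st.f_bfree) * block,
        "avail": st.f_bavail * block,  # space available to non-root users
    }


if __name__ == "__main__":
    for key, value in mount_usage("/").items():
        print(f"{key:8} {value}")
```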

Unmount the directory:

umount /tank/scratch

Modify the file /etc/fstab:

nano /etc/fstab

and add a new entry for /tank/scratch:

/etc/fstab

node01:/tank/scratch /tank/scratch nfs rw 0 0
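The six fields of the fstab entry above are, in order: the remote directory, the mount point, the file system type, the mount options, the dump frequency, and the fsck pass number. A minimal parser makes the layout explicit. The field names follow fstab(5), while parse_fstab_line itself is hypothetical.

```python
from typing import NamedTuple, Optional


class FstabEntry(NamedTuple):
    fs_spec: str     # what to mount, e.g. "node01:/tank/scratch"
    fs_file: str     # mount point
    fs_vfstype: str  # file system type, e.g. "nfs"
    fs_mntops: list  # mount options
    fs_freq: int     # used by dump(8); 0 = not dumped
    fs_passno: int   # fsck order at boot; 0 = not checked


def parse_fstab_line(line: str) -> Optional[FstabEntry]:
    """Parse one /etc/fstab line; return None for blank or commented-out lines."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    fields = line.split()
    fields += ["0"] * (6 - len(fields))  # fs_freq and fs_passno default to 0
    spec, mount_point, vfstype, options, freq, passno = fields[:6]
    return FstabEntry(spec, mount_point, vfstype, options.split(","), int(freq), int(passno))


print(parse_fstab_line("node01:/tank/scratch /tank/scratch nfs rw 0 0"))
```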

Then run

mount -a

Check whether it is mounted:

df -h

Reboot the lxc01 container, log in to it, and make sure /tank/scratch gets mounted:

df -h

6.16. File access on NFS by uid match (Exercise)

On the NFS server, node01, create a new user with the home directory in /tank/scratch:

/usr/sbin/groupadd -g 1666 edward

/usr/sbin/useradd -m -s /bin/bash -u 1666 -g 1666 -d /tank/scratch/edward edward

Copy some files from /etc into the directory /tank/scratch/edward and make edward their owner:

cp /etc/hosts /tank/scratch/edward

cp /etc/nsswitch.conf /tank/scratch/edward

chown edward:edward /tank/scratch/edward/*

Then copy /etc/group into the same directory and leave it owned by root:

cp /etc/group /tank/scratch/edward

On the NFS client container, lxc01, run the command

ls -l /tank/scratch/edward

Since there is no user with UID=1666 and GID=1666 on lxc01, the files are shown as belonging to a non-existent user, identified only by the numeric IDs:

ls -l /tank/scratch/edward

total 5


-rw-r--r-- 1 1666 1666 104 Feb 10 19:32 hosts

-rw-r--r-- 1 1666 1666 1750 Feb 10 19:32 nsswitch.conf

-rw------- 1 root root 114 Feb 10 2003 group
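The numeric owners appear because, with the default AUTH_SYS (sec=sys) security, the Linux NFS client and server typically exchange numeric UIDs and GIDs. The client translates those numbers into names using its own local account database. The sketch below performs that local translation the way ls -l does, and falls back to the number when no local entry exists. owner_names is a hypothetical helper.

```python
import grp
import os
import pwd

def owner_names(path: str) -> tuple:
    """Return (user, group) for `path` the way `ls -l` shows them.

    Names come from the local account database; when an ID has no local
    entry (like UID 1666 on lxc01 in this exercise), the number is shown.
    """
    st = os.stat(path)
    try:
        user = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:  # no local account with this UID
        user = str(st.st_uid)
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # no local group with this GID
        group = str(st.st_gid)
    return user, group


if __name__ == "__main__":
    print(owner_names("/"))
```

On lxc01, calling owner_names on /tank/scratch/edward/hosts would be expected to return the numeric strings until a matching local user exists.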

On lxc01, create user edward with UID=GID=1667:

/usr/sbin/groupadd -g 1667 edward

/usr/sbin/useradd -s /bin/bash -u 1667 -g 1667 -d /tank/scratch/edward edward

Assign password to the user:

passwd edward

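Now, as root on lxc01, try to change the ownership of the NFS-mounted directory:

chown edward:edward /tank/scratch/edward

It doesn’t work, because by default the NFS server maps the client’s root user to an unprivileged user (the root_squash export option):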

chown: changing ownership of `/tank/scratch/edward': Operation not permitted

Change the UID and GID of edward to be consistent with those on the NFS server:

/usr/sbin/groupmod -g 1666 edward

/usr/sbin/usermod -u 1666 -g 1666 edward

Become user edward, then change into the directory /tank/scratch/edward:

su edward

cd /tank/scratch/edward

and see if you can create files in this directory:

touch newfile.txt

Exit from the edward account:

exit

6.17. Unmounting busy directories (Exercises)

Open another terminal on your desktop and ssh to lxc01 as user edward.

ssh edward@lxc01

Also ssh to lxc01 as hostadm, become ‘root’ with sudo -s, and try to unmount the directory:

umount /tank/scratch

If the directory cannot be unmounted and you receive the error message “device is busy” (recent versions of umount print “target is busy”), check which processes hold the directory by running lsof +D on the file system. In our case:

lsof +D /tank/scratch

Kill the process that holds the directory, for example the one with PID 1367, and try to unmount the directory again:

kill -9 1367

umount /tank/scratch
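If lsof is not installed, a rough equivalent can be written against /proc. The Linux-only sketch below reports processes whose working directory or open file descriptors point under the given path. pids_using is a hypothetical helper. This covers the typical culprit in this exercise: edward’s shell sitting in /tank/scratch/edward. Unlike lsof, it does not check memory-mapped files.

```python
import os

def pids_using(path: str) -> set:
    """PIDs whose working directory or open files lie under `path`.

    A rough analogue of `lsof +D`, Linux only (it reads /proc). Processes we
    are not allowed to inspect, or that exit meanwhile, are skipped.
    """
    root = os.path.realpath(path)
    prefix = root.rstrip("/") + "/"
    found = set()
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # not a process directory
        links = [f"/proc/{entry}/cwd"]
        try:
            links += [f"/proc/{entry}/fd/{fd}" for fd in os.listdir(f"/proc/{entry}/fd")]
        except OSError:
            pass  # permission denied or process gone: still try cwd below
        for link in links:
            try:
                target = os.readlink(link)
            except OSError:
                continue
            if target == root or target.startswith(prefix):
                found.add(int(entry))
                break
    return found


if __name__ == "__main__":
    print(sorted(pids_using("/tank/scratch")))
```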

Comment out the NFS entry in the /etc/fstab file:

/etc/fstab

# node01:/tank/scratch /tank/scratch nfs rw 0 0

Avoid NFS mounting through /etc/fstab. Use either a manual mount or automount instead.

6.18. Automount (Exercises)

Install autofs on lxc01 (NFS client).

apt install autofs

Make sure /tank/scratch directory is unmounted:

df -h

If it shows in the list of mounted file systems, unmount it:

umount /tank/scratch

Remove directory /tank/scratch:

rmdir /tank/scratch

On lxc01, configure the master map file, /etc/auto.master, and set the timeout to 60 seconds. The content of /etc/auto.master should be the following:

/etc/auto.master:

/tank /etc/auto.exports --timeout=60

Configure the exports map file, /etc/auto.exports:

/etc/auto.exports:

scratch -rw node01:/tank/scratch
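The two map files work together. The master map names the parent directory (/tank) and the map file. Each line in the map file gives a key (the subdirectory), optional mount options, and the remote location. The simplified sketch below combines them to show which mount autofs is expected to perform on first access. automount_targets is a hypothetical helper, and it recognizes only the --timeout=N spelling used in this exercise.

```python
def automount_targets(master_line: str, map_text: str) -> tuple:
    """Combine an auto.master entry with its indirect map.

    Returns (timeout_seconds or None, {mount_path: (options, location)}).
    Only the "--timeout=N" spelling is recognized in this sketch.
    """
    mount_root, _map_file, *master_options = master_line.split()
    timeout = None
    for option in master_options:
        if option.startswith("--timeout="):
            timeout = int(option.split("=", 1)[1])
    targets = {}
    for line in map_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, *rest = line.split()
        # In the map, mount options are optional and start with "-".
        options = rest.pop(0) if rest and rest[0].startswith("-") else ""
        targets[f"{mount_root.rstrip('/')}/{key}"] = (options, rest[0])
    return timeout, targets


print(automount_targets("/tank /etc/auto.exports --timeout=60",
                        "scratch -rw node01:/tank/scratch"))
```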

Restart or reload autofs:

systemctl restart autofs

Access the file system and check if it gets mounted:

cd /tank/scratch

df -h

Step out of the directory:

cd

Run df -h again in about two minutes to see whether the file system gets unmounted automatically after the inactivity period.

6.19. Stale NFS file handle (Exercise)

On the NFS server, node01, create a new directory tree under NFS exported directory:

mkdir -p /tank/scratch/test/demo

On the client, lxc01, step into the directory:

cd /tank/scratch/test

ls

On the NFS server, node01, remove the directory test together with its subdirectory:

cd /tank/scratch

rm -rf test

On the client, run

ls

It should give you the following error:

ls: cannot open directory .: Stale NFS file handle

Step out of the NFS mounted directory:

cd /

autofs unmounts the NFS directory after the inactivity period (one minute in our case). The next time the directory is mounted, it contains the updated directory tree.
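A program working inside an NFS mount can detect this condition by checking for the ESTALE error number. The C library reports ESTALE as "Stale file handle" (older releases printed "Stale NFS file handle", as in the output above). The sketch below returns None in that case, so the caller can step out of the directory as in the exercise instead of crashing. list_dir_or_none is a hypothetical helper.

```python
import errno
import os

def list_dir_or_none(path: str):
    """List `path`; return None instead of raising on a stale NFS file handle."""
    try:
        return os.listdir(path)
    except OSError as exc:
        if exc.errno == errno.ESTALE:
            # The directory behind the cached handle was removed on the server.
            # The caller should leave it (e.g. cd /) and look the path up again.
            return None
        raise  # other errors (missing path, permissions) are not hidden


if __name__ == "__main__":
    entries = list_dir_or_none(".")
    print("stale handle, step out of the directory" if entries is None else entries)
```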