3. Virtualization

Introduction to operating system virtualization

Virtualization types

KVM virtualization

VM CLI management tool: virsh

VM cloning

VM removal

Virtual drive access

VM appliance deployment


3.1. What is virtualization

In computing, virtualization is a facility that allows multiple operating systems, each running in its own virtual machine (VM), to run simultaneously on one computer in a safe and efficient manner.

Common uses of virtualization:

Application development and testing on a separate system.

Server consolidation on one platform.

Virtual appliances (downloading a VM appliance for a specific application or service).

Prototyping and preparing VMs to run on a remote server and/or in the cloud.

Running multiple operating systems on one desktop.

3.2. Original Virtualization challenges on x86

Virtualization implies sharing the CPU, RAM, and I/O devices among virtual machines (VMs). The traditional x86 platform was not designed for this.

CPU virtualization challenges: how to share the privileged mode (Ring 0), which every guest OS kernel expects to own?

Memory virtualization challenges involve sharing the physical system memory and dynamically allocating it to virtual machines.

Device and I/O virtualization involves managing the routing of I/O requests between virtual devices and the shared physical hardware.

3.3. Virtualization types on x86 platform

Operating system virtualization. The system runs a single kernel. Applications run inside ‘containers’ as if they were on different operating systems. Examples: Solaris Zones, LXC Linux containers, OpenVZ, Docker containers.

Hardware emulation (full virtualization). A hypervisor presents emulated hardware to unmodified guest operating systems. Examples: VMware desktop/server, VirtualBox, QEMU.

Paravirtualization. A hypervisor multiplexes access to hardware by modified guest operating systems. Example: Xen.

Hardware-assisted virtualization on redesigned x86 platforms with virtualization extensions, such as AMD-V and Intel VT. Examples: KVM, VirtualBox, VMware ESX, Hyper-V.

3.4. Virtualization with KVM

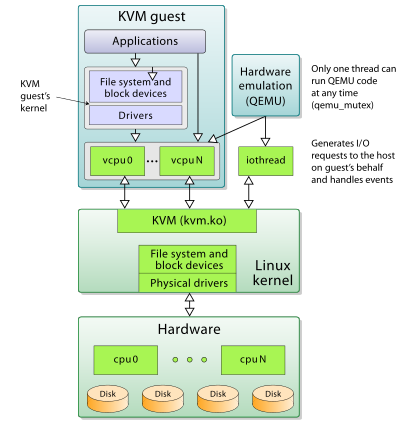

QEMU alone can provide full virtualization by emulating the hardware, including the CPU, in software.

QEMU + KVM (Kernel-based Virtual Machine module) is a full virtualization solution for Linux on x86 hardware with virtualization extensions (Intel VT or AMD-V). QEMU emulates the devices, while the KVM kernel module lets guest code run directly on the CPU.

A virtual machine (VM) essentially consists of two parts:

XML configuration file:

/etc/libvirt/qemu/vm.xml

Disk partition or image file, by default:

/var/lib/libvirt/images/vm.qcow2

To see whether the processor supports hardware virtualization, count the lines of /proc/cpuinfo that contain the vmx (Intel) or svm (AMD) CPU flag:

grep -Ec '(vmx|svm)' /proc/cpuinfo

If the output is 0, your CPU does not support hardware virtualization.

If it is 1 or more, the CPU supports it, but you still need to make sure that virtualization is enabled in the BIOS.
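The bash sketch below is a slightly stricter version of this check and assumes GNU grep. It counts only the `flags` lines. Newer kernels also print a separate `vmx flags` line on Intel CPUs, and the one-liner above counts that line too. The function names `vt_flag_count` and `check_vt` are made up for this example and are not part of any tool.

```shell
# Hypothetical helpers (names are illustrative); assume GNU grep.

# Count "flags" lines that list vmx (Intel VT-x) or svm (AMD-V).
# Only lines starting with "flags" are considered, so the separate
# "vmx flags" line printed by newer kernels is not counted.
vt_flag_count() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # \b keeps "svm" from matching inside longer words such as "svm_lock"
    grep -Ec '^flags[[:space:]]*:.*\b(vmx|svm)\b' "$cpuinfo"
}

# Print a verdict; return 0 if the CPU reports the extensions.
check_vt() {
    local n
    n=$(vt_flag_count "${1:-/proc/cpuinfo}")
    if [ "$n" -gt 0 ]; then
        echo "CPU supports hardware virtualization ($n matching lines);" \
             "check that it is enabled in the BIOS"
        return 0
    fi
    echo "CPU does not report vmx or svm"
    return 1
}
```

Running `check_vt` with no argument reads /proc/cpuinfo. Like the one-liner, it cannot tell whether the feature is disabled in the BIOS.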

Verify that virtualization is enabled in the BIOS with kvm-ok (on Ubuntu it is provided by the cpu-checker package):

kvm-ok

If it shows

INFO: Your CPU supports KVM extensions
INFO: KVM is disabled by your BIOS

then Intel Virtualization Technology (AMD-V on AMD processors) needs to be enabled in the BIOS. If, on the other hand, you see the output below, hardware virtualization is enabled:

INFO: Your CPU supports KVM extensions
INFO: /dev/kvm exists
KVM acceleration can be used

3.5. Management user interface, virsh (Exercise)

The virsh command is a CLI alternative to the GUI-based virt-manager.

Both virsh and virt-manager are built on the libvirt programming interface (API).

virsh can be used, for example, to see the list of running VMs:

virsh list

To list all VMs, including those that are shut off:

virsh list --all

Start a VM, kvm1 for example:

virsh start kvm1

To shut down a VM through virsh, the acpid service needs to be installed and running on the VM.

acpid is the Advanced Configuration and Power Interface (ACPI) event daemon.

Log in to the VM, kvm1, through virt-manager and install acpid:

sudo apt-get install acpid

Log out and try to shut the VM down through virsh:

virsh shutdown kvm1

Verify that it is down. virsh shutdown only sends the request, so the VM may take a few seconds to stop:

virsh list --all
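virsh shutdown returns as soon as the request is sent. A script that has to wait for the VM to stop can therefore poll `virsh domstate`. The bash function below is a minimal sketch. The name `wait_for_shutoff` and the 60-second default timeout are assumptions.

```shell
# Hypothetical helper: poll "virsh domstate" until the VM is shut off.
# Arguments: VM name, optional timeout in seconds (default 60).
wait_for_shutoff() {
    local vm="$1" timeout="${2:-60}" waited=0 state=""
    while [ "$waited" -lt "$timeout" ]; do
        # domstate prints the current state, e.g. "running" or "shut off"
        state=$(virsh domstate "$vm" 2>/dev/null)
        if [ "$state" = "shut off" ]; then
            echo "$vm is shut off"
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "$vm is still '$state' after ${timeout}s" >&2
    return 1
}
```

Example: `virsh shutdown kvm1 && wait_for_shutoff kvm1 120`. If acpid is missing on the guest, the function times out instead of hanging forever.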

Start the VM again

virsh start kvm1

To access the VM console from the desktop terminal, the VM needs a serial console on ttyS0. Log in to the VM, kvm1, through virt-manager as user hostadm, and elevate privileges to root with sudo:

sudo -s

Then edit file /etc/default/grub and assign values to the parameters GRUB_CMDLINE_LINUX_DEFAULT and GRUB_TERMINAL as follows:

GRUB_CMDLINE_LINUX_DEFAULT="console=tty0 console=ttyS0,115200n8"
GRUB_TERMINAL=serial
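If you prefer not to edit the file by hand, the bash/awk sketch below applies the same two settings. For each variable it replaces an existing or commented-out assignment, or appends one if none is found, and it keeps a `.bak` copy. The function names `set_var` and `set_serial_console` are hypothetical. Run it as root on the VM, then continue with update-grub.

```shell
# Hypothetical helper: make LINE the only assignment of NAME in FILE.
# An existing or commented-out "#NAME=" line is replaced; otherwise the
# line is appended at the end.
set_var() {
    local file="$1" name="$2" line="$3" tmp
    tmp=$(mktemp) || return 1
    awk -v name="$name" -v line="$line" '
        $0 ~ ("^#?[ \t]*" name "=") { if (!done) print line; done = 1; next }
        { print }
        END { if (!done) print line }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file mode
    rm -f "$tmp"
}

# Send the kernel console and the GRUB menu to the serial port ttyS0.
set_serial_console() {
    local file="${1:-/etc/default/grub}"
    cp "$file" "$file.bak" || return 1          # backup of the original
    set_var "$file" GRUB_CMDLINE_LINUX_DEFAULT \
        'GRUB_CMDLINE_LINUX_DEFAULT="console=tty0 console=ttyS0,115200n8"'
    set_var "$file" GRUB_TERMINAL 'GRUB_TERMINAL=serial'
}
```

The pattern requires `=` right after the variable name. As a result, GRUB_CMDLINE_LINUX is not touched when GRUB_CMDLINE_LINUX_DEFAULT is replaced.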

Run the command below to regenerate the GRUB configuration:

update-grub

Reboot the VM:

reboot

Close virt-manager. Try to log in to the VM console from the desktop terminal with virsh:

virsh console kvm1

To exit the console, press Ctrl+]:

^]

3.6. Cloning VMs in virt-manager (Exercise)

In the default KVM configuration, VM disk images are stored in directory /var/lib/libvirt/images and VM configurations in directory /etc/libvirt/qemu.

Image files take a lot of space, so we create an additional directory to store them:

mkdir /home/hostadm/KVM

chmod 755 /home/hostadm/KVM

Prepare kvm1 for cloning:

On the VM, edit file /etc/hosts. Remove the line containing 127.0.1.1 kvm1, and correct the line starting with ::1 so that it lists only ip6-localhost ip6-loopback.

The file content should look as follows:

127.0.0.1 localhost

#The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
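The same /etc/hosts change can be made non-interactively with GNU sed, as in the sketch below. The function name `prepare_hosts` is hypothetical, and the function keeps the original file as /etc/hosts.bak. It deletes the 127.0.1.1 line and rewrites the ::1 line to the form shown above.

```shell
# Hypothetical helper: prepare a hosts file for cloning (GNU sed).
prepare_hosts() {
    local file="${1:-/etc/hosts}"
    # -i.bak edits in place and saves the original as FILE.bak
    sed -i.bak \
        -e '/^127\.0\.1\.1[[:space:]]/d' \
        -e 's/^::1[[:space:]].*/::1 ip6-localhost ip6-loopback/' \
        "$file"
}
```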

Execute command shutdown on the VM:

shutdown -h now

On the desktop, make the virtual drive readable by all users:

sudo chmod a+r /var/lib/libvirt/images/kvm1.qcow2

Launch virt-manager, right-click kvm1, and select “Clone”.

Name the cloned system kvm2.

For the storage option, select “Details”, then specify the new path: /home/hostadm/KVM/kvm2.qcow2

Keep the default settings for the other parameters. The clone will be a new VM with the system configuration originally set in kvm1. After cloning completes, boot kvm2:

virsh list --all

virsh start kvm2

Connect to its console with virsh console kvm2 and log in as user hostadm. Elevate privileges by running sudo -s, then follow the procedure below to change the host name from kvm1 to kvm2:

Edit file /etc/hostname and replace kvm1 with kvm2.

Reset the machine ID by running the following commands on kvm2:

rm -f /etc/machine-id

rm -f /var/lib/dbus/machine-id

dbus-uuidgen --ensure=/etc/machine-id

dbus-uuidgen --ensure

Release the current DHCP lease. Here ens3 is the VM's network interface; check the name on your VM with ip link:

dhclient -r ens3
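The host name and machine ID steps are repeated for every clone, so they can be wrapped in one bash function. This is a sketch. The function name and the optional ROOT argument are assumptions; ROOT is useful for trying the function on a copy of the file tree. The explicit path /var/lib/dbus/machine-id assumes the default location that plain `dbus-uuidgen --ensure` writes to.

```shell
# Hypothetical helper: give a freshly cloned guest its own identity.
# Arguments: new host name, optional ROOT prefix (empty on a real system).
# Run as root inside the clone, then release the DHCP lease and reboot.
reset_clone_identity() {
    local new="$1" root="${2:-}"
    # 1. New host name
    printf '%s\n' "$new" > "$root/etc/hostname" || return 1
    # 2. Drop the machine IDs inherited from the original VM
    rm -f "$root/etc/machine-id" "$root/var/lib/dbus/machine-id"
    # 3. Generate fresh ones (the dbus-uuidgen steps above, with explicit paths)
    dbus-uuidgen --ensure="$root/etc/machine-id" &&
        dbus-uuidgen --ensure="$root/var/lib/dbus/machine-id"
}
```

On kvm2 this would be called as `reset_clone_identity kvm2`.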

Execute command reboot on kvm2:

reboot

Exit the VM console with ^] key combination.

Log in to kvm2 again after it boots up:

virsh console kvm2

Check if the system recognizes itself as kvm2:

uname -n

Shut down kvm2 by running shutdown on it:

sudo -s

shutdown -h now

When the VM console exits, make sure kvm2 is no longer running:

virsh list --all

3.7. Cloning VMs with virt-clone (Exercise)

On the desktop, edit file .bashrc in the home directory of hostadm and add the following line at the end of the file:

export LIBVIRT_DEFAULT_URI='qemu:///system'

This tells libvirt client tools, such as virsh and virt-clone, which hypervisor connection to use by default: the system-wide QEMU/KVM instance on the local host.

Reload the file in the current shell (run from the home directory):

source .bashrc
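Running the .bashrc step twice would add the export twice. The sketch below is an idempotent version; the helper name `append_once` is made up for this example.

```shell
# Hypothetical helper: append LINE to FILE only if that exact line is absent.
append_once() {
    local line="$1" file="$2"
    # -x whole-line match, -F fixed string, -q quiet; -- ends options
    grep -qxF -- "$line" "$file" 2>/dev/null ||
        printf '%s\n' "$line" >> "$file"
}
```

Example: `append_once "export LIBVIRT_DEFAULT_URI='qemu:///system'" ~/.bashrc && source ~/.bashrc`. Afterwards, `virsh uri` should print `qemu:///system`.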

Clone kvm1 to a new VM, kvm3, by executing the command below (kvm1 should be shut off):

virt-clone -o kvm1 -n kvm3 -f /home/hostadm/KVM/kvm3.qcow2
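virt-clone expects the source VM to be shut off or paused, so a small wrapper can check the state before cloning. This is a sketch. The function name `clone_vm` and the default image directory `$HOME/KVM` are assumptions, and for simplicity the function only accepts the "shut off" state.

```shell
# Hypothetical wrapper around virt-clone.
# Arguments: source VM, new VM name, optional image directory (default ~/KVM).
clone_vm() {
    local src="$1" new="$2" dir="${3:-$HOME/KVM}" state
    state=$(virsh domstate "$src" 2>/dev/null)
    if [ "$state" != "shut off" ]; then
        echo "$src is '$state'; shut it down before cloning" >&2
        return 1
    fi
    # Same options as in the text: -o original, -n new name, -f new image file
    virt-clone -o "$src" -n "$new" -f "$dir/$new.qcow2"
}
```

Example: `clone_vm kvm1 kvm3` runs the same virt-clone command as above.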

Check if the new VM is in the list, start it, then login to its console:

virsh list --all

virsh start kvm3

virsh console kvm3

Fix the host name: log in to the system as user hostadm and elevate privileges by running

sudo -s

then follow the procedure below to change the host name from kvm1 to kvm3:

Edit file /etc/hostname and replace kvm1 with kvm3.

Reset the machine ID by running the following commands on kvm3:

rm -f /etc/machine-id

rm -f /var/lib/dbus/machine-id

dbus-uuidgen --ensure=/etc/machine-id

dbus-uuidgen --ensure

Release the current DHCP lease:

dhclient -r ens3

Execute command reboot on kvm3:

reboot

Exit the VM console with ^] key combination.

After the reboot, the new VM should come up with the correct host name. virt-clone has already given it a new MAC address.

3.8. Accessing the virtual drive of a VM (Exercise)

If a VM fails to boot for some reason, you may need to access its virtual drive, analyse the system logs, and fix the configuration. Below is the procedure for mounting the qcow2 disk image on the desktop.

Shut down kvm3. The VM must not be running while its drive is mounted on the desktop:

virsh shutdown kvm3

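Load the nbd (network block device) kernel module, allowing up to two partitions per device. Together with qemu-nbd, it makes the qcow2 file available as a mountable block device:

sudo -s

modprobe nbd max_part=2

Change to the KVM directory and connect the image to /dev/nbd0:

cd KVM

qemu-nbd --connect=/dev/nbd0 kvm3.qcow2

Create a mounting point, /mnt/vm, list the partitions on the drive, and mount the root partition (here /dev/nbd0p1; use the partition that fdisk reports as the Linux root file system):

mkdir -p /mnt/vm

fdisk -l /dev/nbd0

mount /dev/nbd0p1 /mnt/vm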

Now you should be able to access the virtual drive content in directory /mnt/vm

cd /mnt/vm

ls

cat etc/hostname

Unmount and disconnect the drive:

cd

umount /mnt/vm

qemu-nbd --disconnect /dev/nbd0

3.9. Delete VM kvm3 (Exercise)

To delete a VM, first shut it down, then run virsh undefine for the VM:

virsh undefine kvm3

virsh undefine removes only the VM definition, so delete the VM disk image separately:

rm KVM/kvm3.qcow2

Check what other VMs are registered with your hypervisor:

virsh list --all

Undefine CentOS7 and centos8.1 (they came with your desktop installation):

virsh undefine CentOS7

virsh undefine centos8.1

3.10. Deploying a CentOS appliance VM (Exercise)

Download a tarball with a CentOS VM into /tmp and extract its contents into the KVM directory:

cd /tmp

wget http://capone.rutgers.edu/coursefiles/CentOS7_VM.tgz

cd /home/hostadm/KVM

tar -zxvf /tmp/CentOS7_VM.tgz

Copy the XML file with the VM configuration into directory /etc/libvirt/qemu as shown below. The img file with the VM root file system stays in the KVM directory.

Assign user and group ownership of both files, XML and img, to hostadm:

sudo cp CentOS7.xml /etc/libvirt/qemu

sudo chown hostadm:hostadm /etc/libvirt/qemu/CentOS7.xml

sudo chown hostadm:hostadm CentOS7.img
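Before running virsh define, it may be worth confirming that the disk path inside CentOS7.xml matches where the image was extracted; otherwise the VM will not start. The bash sketch below assumes the XML uses the `<source file='...'/>` form that libvirt normally writes. It does a text match rather than parsing the XML, and the function name is made up.

```shell
# Hypothetical helper: list disk images referenced by <source file='...'/>
# in a libvirt domain XML file and report whether each one exists.
# Returns 1 if any referenced file is missing.
check_disk_sources() {
    local xml="$1" srcs src missing=0
    # grep -o prints each match on its own line; sed strips the markup
    srcs=$(grep -o "<source file=['\"][^'\"]*['\"]" "$xml" |
           sed -e "s/^<source file=['\"]//" -e "s/['\"]\$//")
    while IFS= read -r src; do
        [ -n "$src" ] || continue
        if [ -e "$src" ]; then
            echo "ok $src"
        else
            echo "missing $src"
            missing=1
        fi
    done <<< "$srcs"
    return "$missing"
}
```

Example: `check_disk_sources CentOS7.xml` in the KVM directory. Once the VM is defined, `virsh domblklist CentOS7` shows the same disk paths.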

Define the new VM configuration in KVM:

sudo virsh define /etc/libvirt/qemu/CentOS7.xml

Start the new VM:

virsh start CentOS7

Log in to the new VM console as user hostadm with password unisys:

virsh console CentOS7

Shut down CentOS7 from its console:

shutdown -h now