Configuration Management¶

Outline of the topic:¶

Major HPC installation types and configuration management challenges

Detail what configuration management is and why it is useful

Review the current landscape of available tools

Ansible basics and examples:

configuration files

playbooks

commands for remote tasks

Major HPC installation types and management challenges¶

Traditional diskful (“stateful”) compute nodes¶

The operating system and applications reside on the local system drive.

They are preserved at system reboot.

Configuration management challenge:

keeping the OS and apps identical across the cluster nodes.

Configuration tools: ansible, puppet, chef, salt, cfengine.

This is the type of installation we have here, at the LCI workshop.

Network booted diskless (“stateless”) compute nodes¶

Operating system boots up from network.

The root file system / resides either in ramdisk or on nfs-root (ro-mounted).

Applications can be either in ramdisk, or on nfs (ro-mounted), or on local disk (satellite installation).

Configuration management challenges:

ramdisk (initrd) configuration

possible excessive network traffic

nfs-root redundancy and caching

maintain identical software content on a local disk for satellite systems.

Configuration tools: initramfs-tools, dracut, xCAT, Warewulf3 (outdated).

Defining Configuration Management¶

At its broadest level, the management and maintenance of operating system and service configuration via code instead of manual intervention.

More formally:

Declaring the system state in a repeatable and auditable fashion and using tools to impose state and prevent systems from deviating

State¶

All systems have a ‘state’ comprising:

Files on Disk

Running services

State can be supplied by:

Installation / provisioning systems

Golden Images

Manual steps including direct configuration changes and setup scripts

Modern Configuration Management Features¶

Idempotency

Declaration and management of files and services to reach a ‘desired state’

Revision Control

Systems are managed with an ‘Infrastructure as code’ model

Composable and flexible

Benefits of configuration management¶

Centralized catalog of all system configuration

Automated enforcement of system state from an authoritative source

Ensured consistency between systems

Rapid system provisioning from easily-composed components

Preflight tests to ensure deployments generate expected results

Collection of system ‘ground truths’ for better decision making

Modern configuration-management systems¶

Puppet

Ruby based

Chef Infra

Ruby based

CFEngine

C based

Salt

Python based

Ansible

Python based

How Ansible works¶

Ansible connects to compute nodes via ssh as a regular user. Needs either a public-private key pair for the user running ansible, or host-based authentication configured.

Forks several instances to ssh concurrently to multiple nodes.

Elevates to root privileges via sudo. Needs sudo privilege on the nodes for the user running ansible.

Runs configuration/management tasks via python modules. Needs python3 on the nodes, as well as the Ansible python modules (ansible-core) on the host that runs ansible.

The tasks are defined in Ansible playbooks (yaml files). The admin needs to understand yaml syntax for ansible tasks.

Doesn’t touch the system and configuration if they are already in the desired final target state.
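
As a minimal sketch of what a "desired final target state" looks like in practice, the play below declares that a directory exists and that a service is running. The directory path /opt/lab_scratch is a hypothetical name chosen for illustration; the all_nodes group and the slurmd service are borrowed from the cluster examples in this document, and privilege escalation is assumed to be configured in ansible.cfg.

```yaml
---
- name: Declare desired state (illustrative sketch)
  hosts: all_nodes
  tasks:
    # Hypothetical path: the directory is created only if it is missing
    - name: ensure a scratch directory exists
      ansible.builtin.file:
        path: /opt/lab_scratch
        state: directory
        owner: root
        group: root
        mode: '0755'

    # The service is started only if it is not already running
    - name: ensure slurmd is running
      ansible.builtin.service:
        name: slurmd
        state: started
```

On a second run against unchanged nodes, both tasks should report ok rather than changed, since nothing needs to be done.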

A simple Ansible setup example¶

Ansible file structure for MPI installation on the compute nodes. All the files are under:

    Lab_MPI/Ansible
    ├── ansible.cfg
    ├── Files
    │   └── openmpi-4.1.5-1.el8.x86_64.rpm
    ├── hosts.ini
    ├── install_mpi.yml
    └── setup_mpiuser.yml

The main config file, ansible.cfg on our cluster¶

    [defaults]
    inventory = hosts.ini
    remote_user = instructor
    host_key_checking = false
    remote_tmp = /tmp/.ansible/tmp
    interpreter_python = /bin/python3
    forks = 4

    [privilege_escalation]
    become = true
    become_method = sudo
    become_user = root
    become_ask_pass = false
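
The connection and privilege settings can also be set per play rather than globally. The following is a sketch, not part of the workshop files: it repeats the remote_user and become settings from ansible.cfg as play-level keywords, and the whoami task is added only to make the effect visible.

```yaml
---
- name: Play-level equivalents of the ansible.cfg settings (sketch)
  hosts: all_nodes
  remote_user: instructor   # same role as remote_user in [defaults]
  become: true              # same role as become in [privilege_escalation]
  become_method: sudo
  become_user: root
  tasks:
    - name: confirm which user the tasks run as
      ansible.builtin.command: whoami
      changed_when: false   # read-only command, never reports "changed"
```

Play-level keywords take precedence over the values in ansible.cfg, which makes them useful when only one play needs different settings.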

Inventory (hosts) file hosts.ini¶

    [all_nodes]
    compute1
    compute2
    compute3
    compute4

    [head]
    localhost ansible_connection=local
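
Ansible also accepts inventories written in YAML. As a sketch, the same groups and hosts could be expressed as follows; the file name hosts.yml is an assumption and is not one of the workshop files.

```yaml
# hosts.yml (hypothetical file name): YAML form of the same inventory
all:
  children:
    all_nodes:
      hosts:
        compute1:
        compute2:
        compute3:
        compute4:
    head:
      hosts:
        localhost:
          ansible_connection: local
```

Running ansible-inventory -i hosts.yml --graph prints the resulting group tree, which is a quick way to check that the file parses as intended.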

Package installation example, install_mpi.yml¶

The file can have any name.

The extension is .yml or .yaml. The configuration syntax is YAML.

Ansible spools the rpm file, openmpi-4.1.5-1.el8.x86_64.rpm, into the local /tmp directory on the nodes, then installs it.

All the work is done by the tasks:

    ---
    - name: Install a package on the head and compute nodes
      hosts: head, all_nodes
      gather_facts: no
      tasks:
        - name: copy mpi rpm file
          ansible.builtin.copy:
            src: Files/openmpi-4.1.5-1.el8.x86_64.rpm
            dest: /tmp
            owner: root
            group: root
            mode: '0644'
        - name: install openmpi
          ansible.builtin.dnf:
            name: /tmp/openmpi-4.1.5-1.el8.x86_64.rpm
            disable_gpg_check: yes
            state: present

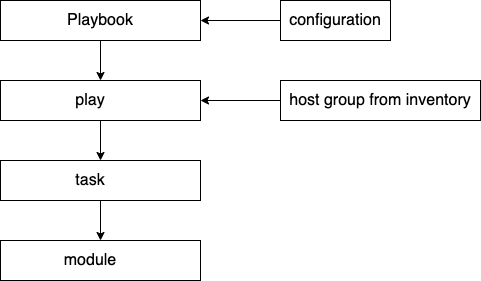

Ansible organization: playbook, play, role, and task.¶

Plays are associated with groups of hosts in the inventory.

Roles contain collections of reusable tasks.

Tasks perform all the work by utilizing modules.
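
To make the hierarchy concrete, here is a sketch of one playbook file containing two plays. The role names common and compute are hypothetical; for the playbook to run, each would need to exist as a directory such as roles/common/tasks/main.yml next to the playbook.

```yaml
---
# One playbook file containing two plays
- name: Play 1, configure the head node
  hosts: head
  roles:
    - common            # hypothetical role
  tasks:
    - name: report which host this play runs on
      ansible.builtin.debug:
        msg: "Configuring {{ inventory_hostname }}"

- name: Play 2, configure the compute nodes
  hosts: all_nodes
  roles:
    - common            # the same role reused in a second play
    - compute           # hypothetical role
```

Within a play, the roles run before the entries listed under tasks.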

Ansible modules¶

The modules are used by tasks to do work.

The most commonly used modules:

copy files: ansible.builtin.copy

set file attributes: ansible.builtin.file

install packages: ansible.builtin.dnf and ansible.builtin.apt

execute shell commands: ansible.builtin.shell

restart a service: ansible.builtin.service

To list all the modules installed on your system:

ansible-doc -l

Read the info on a specific module, for example, ansible.builtin.file:

ansible-doc ansible.builtin.file
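
The sketch below shows three of the modules listed above used together in one play. The chrony package, the chronyd service, and the /etc/motd content are assumptions chosen for illustration on an EL8-style system; they are not part of the workshop setup.

```yaml
---
- name: Common modules in one play (illustrative sketch)
  hosts: all_nodes
  tasks:
    - name: install a package from the configured repositories
      ansible.builtin.dnf:
        name: chrony              # assumed example package
        state: present

    - name: write a small file from inline content
      ansible.builtin.copy:
        content: "Managed by Ansible\n"
        dest: /etc/motd
        owner: root
        group: root
        mode: '0644'

    - name: restart a service
      ansible.builtin.service:
        name: chronyd             # assumed example service
        state: restarted
```

Note that state: restarted restarts the service on every run, so this last task always reports changed; state: started is the choice when the service only needs to be running.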

Examples of utilizing ansible.builtin.shell module for a remote command¶

Use the module ansible.builtin.shell for a remote command.

For example, check the status of slurmd on all the nodes:

ansible all -m ansible.builtin.shell -a "systemctl status slurmd"

Restart slurmd on compute1:

ansible compute1 -m ansible.builtin.shell -a "systemctl restart slurmd"
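
The same kind of check can be kept in a playbook when it needs to be repeated. This is a sketch under assumptions: the variable name slurmd_state is hypothetical, and systemctl is-active is used in place of systemctl status because it prints a single word that is easy to report.

```yaml
---
- name: Check slurmd on the compute nodes (sketch)
  hosts: all_nodes
  gather_facts: no
  tasks:
    - name: query slurmd status
      ansible.builtin.shell: systemctl is-active slurmd
      register: slurmd_state      # hypothetical variable name
      changed_when: false         # a status query changes nothing
      failed_when: false          # report inactive nodes instead of aborting

    - name: show the result per node
      ansible.builtin.debug:
        msg: "slurmd on {{ inventory_hostname }}: {{ slurmd_state.stdout }}"
```

Setting failed_when: false is a design choice here: systemctl is-active exits non-zero for a stopped service, and without it the play would stop on the first inactive node instead of reporting all of them.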

Ansible playbook development steps¶

Set configuration files: ansible.cfg and hosts.ini.

Identify groups of hosts to execute identical tasks on (plays).

Define the top-level playbook tasks (roles).

Add features you need in new yaml files.

Place configuration files and packages in the files directories for the roles.

Tag the tasks for debugging purposes.

Check the playbooks for syntactic errors:

ansible-playbook playbook.yml --syntax-check

Perform a dry run:

ansible-playbook playbook.yml --check

List the tagged tasks:

ansible-playbook --list-tags playbook.yml

Run only the tasks tagged compilation in the playbook, for example:

ansible-playbook --tags compilation playbook.yml

Run the playbook:

ansible-playbook playbook.yml
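
For the tagging step above, a task is tagged with the tags keyword. The following sketch assumes a hypothetical source directory /opt/src/myapp containing a Makefile and a hypothetical second tag, report; only the tag name compilation comes from the steps above.

```yaml
---
- name: Playbook with tagged tasks (sketch)
  hosts: all_nodes
  gather_facts: no
  tasks:
    - name: build the application
      ansible.builtin.command:
        cmd: make
        chdir: /opt/src/myapp     # hypothetical source directory
      tags:
        - compilation

    - name: print a completion message
      ansible.builtin.debug:
        msg: "Finished on {{ inventory_hostname }}"
      tags:
        - report                  # hypothetical second tag
```

With this file saved as playbook.yml, listing the tags should show both compilation and report, and selecting the compilation tag should run only the build task.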